kwcoco.demo.toydata_video module

Generates “toydata” for demo and testing purposes.

This is the video version of the toydata generator and should be preferred to the loose image version in toydata_image.

- kwcoco.demo.toydata_video.random_video_dset(num_videos=1, num_frames=2, num_tracks=2, anchors=None, image_size=(600, 600), verbose=3, render=False, aux=None, multispectral=False, multisensor=False, rng=None, dpath=None, max_speed=0.01, channels=None, background='noise', **kwargs)

Create a toy Coco Video Dataset

- Parameters:

num_videos (int) – number of videos

num_frames (int) – number of images per video

num_tracks (int) – number of tracks per video

image_size (Tuple[int, int]) – The width and height of the generated images

render (bool | dict) – if truthy the toy annotations are synthetically rendered. See render_toy_image() for details.

rng (int | None | RandomState) – random seed / state

dpath (str | PathLike | None) – only used if render is truthy, place to write rendered images.

verbose (int) – verbosity mode, default=3

aux (bool | None) – if True generates dummy auxiliary / asset channels

multispectral (bool) – similar to aux, but does not have the concept of a “main” image.

max_speed (float) – max speed of movers

channels (str | None) – experimental new way to get MSI with specific band distributions.

**kwargs – used for old, backwards-compatible argument names. gsize is an alias for image_size.

- SeeAlso:

random_single_video_dset
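The tid_start, gid_start and video_id parameters of random_single_video_dset suggest how several single-video datasets can be stacked without id collisions. The sketch below is only an illustration of that bookkeeping under the assumption that each video consumes num_frames image ids and num_tracks track ids; plan_video_ids is a hypothetical helper, not part of kwcoco, and the library may assign ids differently.

```python
def plan_video_ids(num_videos, num_frames, num_tracks):
    """Return per-video id offsets so image and track ids never collide.

    Hypothetical helper: assumes every video uses exactly ``num_frames``
    image ids and ``num_tracks`` track ids, and that ids start at 1.
    """
    plans = []
    gid_start = 1  # first image id of the next video
    tid_start = 1  # first track id of the next video
    for video_id in range(1, num_videos + 1):
        plans.append({
            'video_id': video_id,
            'gid_start': gid_start,
            'tid_start': tid_start,
        })
        # Reserve the id ranges used by this video.
        gid_start += num_frames
        tid_start += num_tracks
    return plans


plans = plan_video_ids(num_videos=3, num_frames=2, num_tracks=5)
for plan in plans:
    print(plan)
```

Each entry could then be passed as keyword arguments to a per-video generator.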

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> dset = random_video_dset(render=True, num_videos=3, num_frames=2,
>>>                          num_tracks=5, image_size=(128, 128))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> dset.show_image(1, doclf=True)
>>> dset.show_image(2, doclf=True)

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> dset = random_video_dset(render=False, num_videos=3, num_frames=2, num_tracks=10)
>>> dset._tree()
>>> dset.imgs[1]

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> import ubelt as ub
>>> # Test small images
>>> dset = random_video_dset(render=True, num_videos=1, num_frames=1,
>>>                          num_tracks=1, image_size=(2, 2))
>>> ann = dset.annots().peek()
>>> print('ann = {}'.format(ub.urepr(ann, nl=2)))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> dset.show_image(1, doclf=True)

- kwcoco.demo.toydata_video.random_single_video_dset(image_size=(600, 600), num_frames=5, num_tracks=3, tid_start=1, gid_start=1, video_id=1, anchors=None, rng=None, render=False, dpath=None, autobuild=True, verbose=3, aux=None, multispectral=False, max_speed=0.01, channels=None, multisensor=False, **kwargs)

Create the video scene layout of object positions.

Note

Does not render the data unless specified.

- Parameters:

image_size (Tuple[int, int]) – size of the images

num_frames (int) – number of frames in this video

num_tracks (int) – number of tracks in this video

tid_start (int) – track-id start index, default=1

gid_start (int) – image-id start index, default=1

video_id (int) – video-id of this video, default=1

anchors (ndarray | None) – base anchor sizes of the object boxes we will generate.

rng (RandomState | None | int) – random state / seed

render (bool | dict) – if truthy, the images are rendered. If a dict is given, its entries are used as the rendering parameters.

autobuild (bool) – prebuild coco lookup indexes, default=True

verbose (int) – verbosity level

aux (bool | None | List[str]) – if specified generates auxiliary channels

multispectral (bool) – if specified, simulates multispectral imagery. This is similar to aux, but has no “main” file.

max_speed (float) – max speed of movers

channels (str | None | kwcoco.ChannelSpec) – if specified generates multispectral images with dummy channels

multisensor (bool) – if True, generates demodata from “multiple sensors”; in other words, observations may have different “bands”.

**kwargs – used for old, backwards-compatible argument names. gsize is an alias for image_size.
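To make the multisensor idea concrete, the following sketch assigns a sensor to each frame so that different observations carry different bands. It is a rough illustration only: the sensor names, band lists and the assign_sensors helper are hypothetical, and the only convention borrowed from kwcoco is joining the bands of one stream with "|".

```python
import random

# Hypothetical sensors and band lists, chosen only for illustration.
SENSOR_BANDS = {
    'sensor_a': ['red', 'green', 'blue'],
    'sensor_b': ['nir', 'swir'],
    'sensor_c': ['panchromatic'],
}


def assign_sensors(num_frames, seed=0):
    """Pick one sensor per frame and record the channels it observes."""
    rng = random.Random(seed)
    names = sorted(SENSOR_BANDS)
    frames = []
    for frame_index in range(num_frames):
        sensor = rng.choice(names)
        frames.append({
            'frame_index': frame_index,
            'sensor': sensor,
            # Bands of a single stream are joined with "|".
            'channels': '|'.join(SENSOR_BANDS[sensor]),
        })
    return frames


frames = assign_sensors(num_frames=4, seed=0)
for frame in frames:
    print(frame)
```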

Todo

[ ] Need maximum allowed object overlap measure

[ ] Need better parameterized path generation

Example

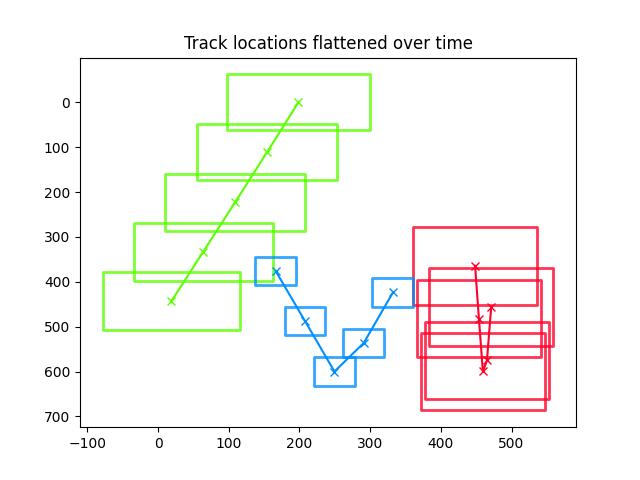

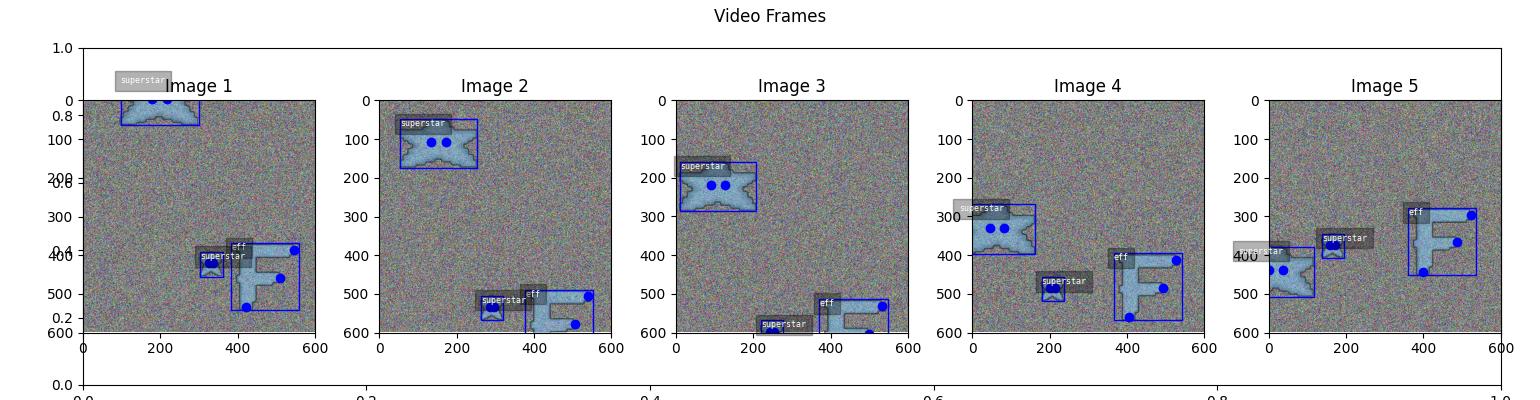

>>> import numpy as np
>>> from kwcoco.demo.toydata_video import random_single_video_dset
>>> anchors = np.array([[0.3, 0.3], [0.1, 0.1]])
>>> dset = random_single_video_dset(render=True, num_frames=5,
>>>                                 num_tracks=3, anchors=anchors,
>>>                                 max_speed=0.2, rng=91237446)
>>> # xdoctest: +REQUIRES(--show)
>>> # Show the tracks in a single image
>>> import kwplot
>>> import kwimage
>>> #kwplot.autosns()
>>> kwplot.autoplt()
>>> # Group track boxes and centroid locations
>>> paths = []
>>> track_boxes = []
>>> for tid, aids in dset.index.trackid_to_aids.items():
>>>     boxes = dset.annots(aids).boxes.to_cxywh()
>>>     path = boxes.data[:, 0:2]
>>>     paths.append(path)
>>>     track_boxes.append(boxes)
>>> # Plot the tracks over time
>>> ax = kwplot.figure(fnum=1, doclf=1).gca()
>>> colors = kwimage.Color.distinct(len(track_boxes))
>>> for i, boxes in enumerate(track_boxes):
>>>     color = colors[i]
>>>     path = boxes.data[:, 0:2]
>>>     boxes.draw(color=color, centers={'radius': 0.01}, alpha=0.8)
>>>     ax.plot(path.T[0], path.T[1], 'x-', color=color)
>>> ax.invert_yaxis()
>>> ax.set_title('Track locations flattened over time')
>>> # Plot the image sequence
>>> fig = kwplot.figure(fnum=2, doclf=1)
>>> gids = list(dset.imgs.keys())
>>> pnums = kwplot.PlotNums(nRows=1, nSubplots=len(gids))
>>> for gid in gids:
>>>     dset.show_image(gid, pnum=pnums(), fnum=2, title=f'Image {gid}', show_aid=0, setlim='image')
>>> fig.suptitle('Video Frames')
>>> fig.set_size_inches(15.4, 4.0)
>>> fig.tight_layout()
>>> kwplot.show_if_requested()

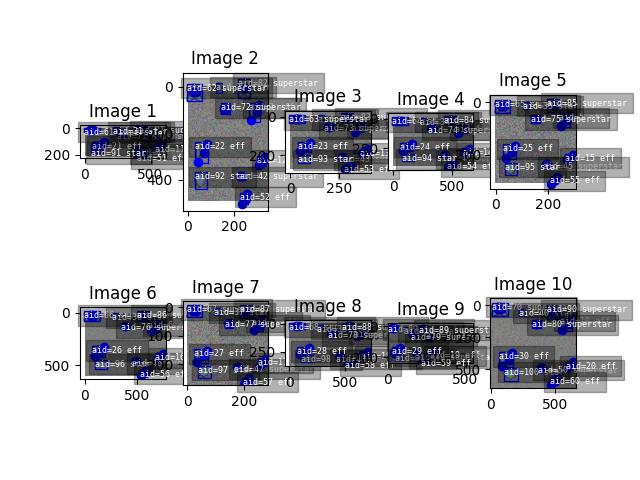
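The example above groups annotations by track and plots their box centers. The same grouping can be sketched without kwcoco on plain COCO-style dictionaries. The annotation records below are made up for illustration, and track_centroids is a hypothetical helper; it assumes each bbox is stored as [x, y, width, height].

```python
from collections import defaultdict

# Hypothetical annotations in COCO style: bbox is [x, y, width, height].
annotations = [
    {'id': 1, 'image_id': 1, 'track_id': 1, 'bbox': [10, 10, 20, 20]},
    {'id': 2, 'image_id': 1, 'track_id': 2, 'bbox': [50, 60, 10, 10]},
    {'id': 3, 'image_id': 2, 'track_id': 1, 'bbox': [14, 12, 20, 20]},
    {'id': 4, 'image_id': 2, 'track_id': 2, 'bbox': [48, 66, 10, 10]},
]


def track_centroids(annotations):
    """Map each track id to its box centers, ordered by image id."""
    tracks = defaultdict(list)
    for ann in sorted(annotations, key=lambda a: a['image_id']):
        x, y, w, h = ann['bbox']
        tracks[ann['track_id']].append((x + w / 2, y + h / 2))
    return dict(tracks)


paths = track_centroids(annotations)
print(paths)
```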

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> import numpy as np
>>> anchors = np.array([[0.2, 0.2], [0.1, 0.1]])
>>> gsize = np.array([(600, 600)])
>>> print(anchors * gsize)
>>> dset = random_single_video_dset(render=True, num_frames=10,
>>>                                 anchors=anchors, num_tracks=10,
>>>                                 image_size='random')
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> plt = kwplot.autoplt()
>>> plt.clf()
>>> gids = list(dset.imgs.keys())
>>> pnums = kwplot.PlotNums(nSubplots=len(gids))
>>> for gid in gids:
>>>     dset.show_image(gid, pnum=pnums(), fnum=1, title=f'Image {gid}')
>>> kwplot.show_if_requested()
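The example above multiplies anchors by the image size, which suggests that anchors are box sizes expressed as fractions of the image. A minimal pure-Python sketch of that conversion follows; anchors_to_pixels is a hypothetical helper, and the (width, height) ordering of both arguments is an assumption.

```python
def anchors_to_pixels(anchors, image_size):
    """Scale fractional (width, height) anchors to integer pixel sizes.

    Assumes ``image_size`` is (width, height) and that each anchor is a
    (width, height) fraction of the image.
    """
    width, height = image_size
    return [(round(w * width), round(h * height)) for w, h in anchors]


pixel_sizes = anchors_to_pixels([(0.2, 0.2), (0.1, 0.1)], image_size=(600, 600))
print(pixel_sizes)  # [(120, 120), (60, 60)]
```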

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> dset = random_single_video_dset(num_frames=10, num_tracks=10, aux=True)
>>> assert 'auxiliary' in dset.imgs[1]
>>> assert dset.imgs[1]['auxiliary'][0]['channels']
>>> assert dset.imgs[1]['auxiliary'][1]['channels']
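The assertions above rely on each image dictionary carrying an 'auxiliary' list whose items name their channels. The sketch below shows what such a record might look like and how it could be inspected. All file names and channel names are hypothetical placeholders, and list_channels is an illustrative helper rather than a kwcoco function.

```python
import json

# Hypothetical image record; file names and channel names are placeholders.
image_record = {
    'id': 1,
    'file_name': 'images/img_00001.png',
    'width': 600,
    'height': 600,
    'auxiliary': [
        {'file_name': 'aux/chan_a/img_00001.tif', 'channels': 'chan_a'},
        {'file_name': 'aux/chan_b/img_00001.tif', 'channels': 'chan_b'},
    ],
}


def list_channels(img):
    """Return the channel names of every auxiliary item of an image."""
    return [aux['channels'] for aux in img.get('auxiliary', [])]


print(list_channels(image_record))
# The record uses only plain types, so it serializes to JSON directly.
text = json.dumps(image_record)
```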

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> multispectral = True
>>> dset = random_single_video_dset(num_frames=1, num_tracks=1, multispectral=True)
>>> dset._check_json_serializable()
>>> dset.dataset['images']
>>> assert dset.imgs[1]['auxiliary'][1]['channels']
>>> # test that we can render
>>> render_toy_dataset(dset, rng=0, dpath=None, renderkw={})

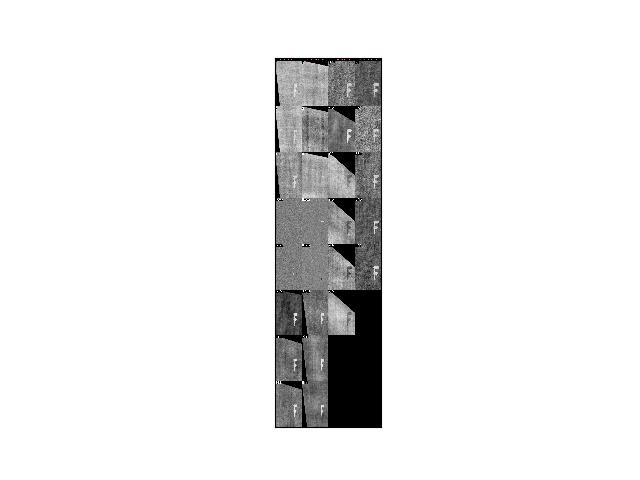

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> import ubelt as ub
>>> dset = random_single_video_dset(num_frames=4, num_tracks=1, multispectral=True, multisensor=True, image_size='random', rng=2338)
>>> dset._check_json_serializable()
>>> assert dset.imgs[1]['auxiliary'][1]['channels']
>>> # Print before and after render
>>> #print('multisensor-images = {}'.format(ub.urepr(dset.dataset['images'], nl=-2)))
>>> #print('multisensor-images = {}'.format(ub.urepr(dset.dataset, nl=-2)))
>>> print(ub.hash_data(dset.dataset))
>>> # test that we can render
>>> render_toy_dataset(dset, rng=0, dpath=None, renderkw={})
>>> #print('multisensor-images = {}'.format(ub.urepr(dset.dataset['images'], nl=-2)))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> from kwcoco.demo.toydata_video import _draw_video_sequence  # NOQA
>>> gids = [1, 2, 3, 4]
>>> final = _draw_video_sequence(dset, gids)
>>> print('dset.fpath = {!r}'.format(dset.fpath))
>>> kwplot.imshow(final)

- kwcoco.demo.toydata_video._draw_video_sequence(dset, gids)

Helper to draw a multi-sensor sequence

- kwcoco.demo.toydata_video.render_toy_dataset(dset, rng, dpath=None, renderkw=None, verbose=0)

Create toydata_video renderings for a preconstructed coco dataset.

- Parameters:

dset (kwcoco.CocoDataset) – A dataset that contains special “renderable” annotations (e.g. the demo shapes). Each image can contain special fields that influence how an image will be rendered.

Currently this process is simple: it creates a noisy image with the shapes superimposed where the annotations indicate they should exist. In the future this may become more sophisticated.

Each item in dset.dataset['images'] will be modified to add the “file_name” field indicating where the rendered data is written.

rng (int | None | RandomState) – random state

dpath (str | PathLike | None) – The location to write the images to. If unspecified, it is written to the rendered folder inside the kwcoco cache directory.

renderkw (dict | None) – See render_toy_image() for details. Also takes imwrite keyword args that are only handled in this function. TODO better docs.
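The description above says that rendering adds a “file_name” field to every image record. The sketch below illustrates only that bookkeeping step on plain dictionaries; no pixels are written. The assign_file_names helper, the directory name and the naming pattern are hypothetical and do not reflect how kwcoco actually names its files.

```python
from pathlib import PurePosixPath


def assign_file_names(images, dpath):
    """Add a 'file_name' entry to each image record, in place.

    Hypothetical naming scheme: ``<dpath>/img_<zero-padded id>.png``.
    """
    for img in images:
        name = 'img_{:05d}.png'.format(img['id'])
        img['file_name'] = str(PurePosixPath(dpath) / name)
    return images


images = [{'id': 1}, {'id': 2}, {'id': 3}]
assign_file_names(images, dpath='rendered')
for img in images:
    print(img)
```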

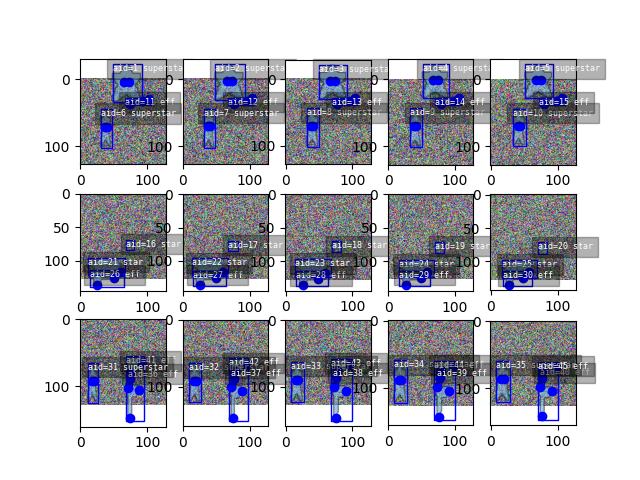

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> import kwarray
>>> rng = None
>>> rng = kwarray.ensure_rng(rng)
>>> num_tracks = 3
>>> dset = random_video_dset(rng=rng, num_videos=3, num_frames=5,
>>>                          num_tracks=num_tracks, image_size=(128, 128))
>>> dset = render_toy_dataset(dset, rng)
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> plt = kwplot.autoplt()
>>> plt.clf()
>>> gids = list(dset.imgs.keys())
>>> pnums = kwplot.PlotNums(nSubplots=len(gids), nRows=num_tracks)
>>> for gid in gids:
>>>     dset.show_image(gid, pnum=pnums(), fnum=1, title=False)
>>> pnums = kwplot.PlotNums(nSubplots=len(gids))

- kwcoco.demo.toydata_video.render_toy_image(dset, gid, rng=None, renderkw=None)

Modifies dataset inplace, rendering synthetic annotations.

This does not write to disk. Instead this writes to placeholder values in the image dictionary.

- Parameters:

dset (kwcoco.CocoDataset) – coco dataset with renderable annotations / images

gid (int) – image to render

rng (int | None | RandomState) – random state

renderkw (dict | None) – rendering config:

- gray (bool): gray or color images
- fg_scale (float): foreground noisiness (gauss std)
- bg_scale (float): background noisiness (gauss std)
- fg_intensity (float): foreground brightness (gauss mean)
- bg_intensity (float): background brightness (gauss mean)
- newstyle (bool): use new kwcoco datastructure formats
- with_kpts (bool): include keypoint info
- with_sseg (bool): include segmentation info

- Returns:

the inplace-modified image dictionary

- Return type:

Dict
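The fg_intensity, fg_scale, bg_intensity and bg_scale options are described as Gaussian means and standard deviations. The sketch below shows one way such parameters could drive a grayscale rendering: background pixels and pixels inside a box are drawn from two different Gaussians and clipped to [0, 1]. This is an illustration under assumptions and not kwcoco's renderer; render_gray, the default values and the rectangular foreground are all hypothetical.

```python
import random


def render_gray(width, height, box, rng, bg_intensity=0.1, bg_scale=0.1,
                fg_intensity=0.9, fg_scale=0.1):
    """Return a height x width nested list of floats in [0, 1].

    ``box`` is (x, y, w, h) in pixels. Pixels inside the box are sampled
    from N(fg_intensity, fg_scale), all others from
    N(bg_intensity, bg_scale). Default values are illustrative only.
    """
    x0, y0, w, h = box
    rows = []
    for y in range(height):
        row = []
        for x in range(width):
            inside = x0 <= x < x0 + w and y0 <= y < y0 + h
            if inside:
                mean, std = fg_intensity, fg_scale
            else:
                mean, std = bg_intensity, bg_scale
            value = rng.gauss(mean, std)
            # Clip so the result is a valid intensity.
            row.append(min(1.0, max(0.0, value)))
        rows.append(row)
    return rows


rng = random.Random(0)
canvas = render_gray(16, 16, box=(4, 4, 8, 8), rng=rng)
print(len(canvas), len(canvas[0]))
```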

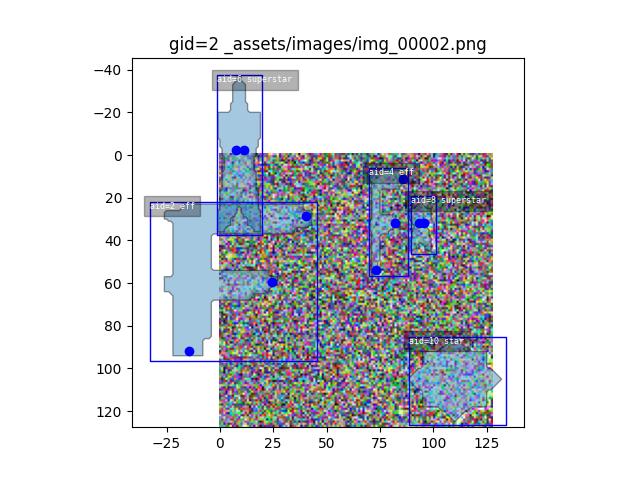

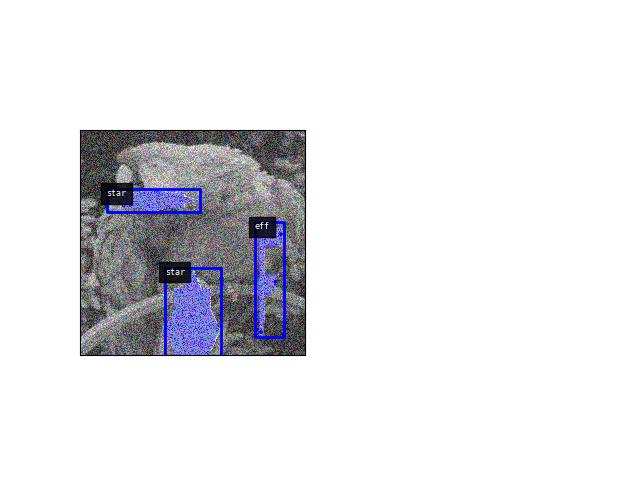

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> import ubelt as ub
>>> image_size = (600, 600)
>>> num_frames = 5
>>> verbose = 3
>>> rng = None
>>> import kwarray
>>> rng = kwarray.ensure_rng(rng)
>>> aux = 'mx'
>>> dset = random_single_video_dset(
>>>     image_size=image_size, num_frames=num_frames, verbose=verbose, aux=aux, rng=rng)
>>> print('dset.dataset = {}'.format(ub.urepr(dset.dataset, nl=2)))
>>> gid = 1
>>> renderkw = {}
>>> renderkw['background'] = 'parrot'
>>> render_toy_image(dset, gid, rng, renderkw=renderkw)
>>> img = dset.imgs[gid]
>>> canvas = img['imdata']
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> kwplot.imshow(canvas, doclf=True, pnum=(1, 2, 1))
>>> dets = dset.annots(gid=gid).detections
>>> dets.draw()

>>> auxdata = img['auxiliary'][0]['imdata']
>>> aux_canvas = false_color(auxdata)
>>> kwplot.imshow(aux_canvas, pnum=(1, 2, 2))
>>> _ = dets.draw()

>>> # xdoctest: +REQUIRES(--show)
>>> import ubelt as ub
>>> from kwcoco.demo.toydata_image import demodata_toy_img
>>> img, anns = demodata_toy_img(image_size=(172, 172), rng=None, aux=True)
>>> print('anns = {}'.format(ub.urepr(anns, nl=1)))
>>> import kwplot
>>> kwplot.autompl()
>>> kwplot.imshow(img['imdata'], pnum=(1, 2, 1), fnum=1)
>>> auxdata = img['auxiliary'][0]['imdata']
>>> kwplot.imshow(auxdata, pnum=(1, 2, 2), fnum=1)
>>> kwplot.show_if_requested()

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> import kwarray
>>> multispectral = True
>>> dset = random_single_video_dset(num_frames=1, num_tracks=1, multispectral=True)
>>> gid = 1
>>> dset.imgs[gid]
>>> rng = kwarray.ensure_rng(0)
>>> renderkw = {'with_sseg': True}
>>> img = render_toy_image(dset, gid, rng=rng, renderkw=renderkw)

- kwcoco.demo.toydata_video.render_foreground(imdata, chan_to_auxinfo, dset, annots, catpats, with_sseg, with_kpts, dims, newstyle, gray, rng)

Renders demo annotations on top of a demo background

- kwcoco.demo.toydata_video.render_background(img, rng, gray, bg_intensity, bg_scale, imgspace_background=None)

- kwcoco.demo.toydata_video.false_color(twochan)

TODO: the function ensure_false_color will eventually be ported to kwimage; use that instead once it is available.

- kwcoco.demo.toydata_video.random_multi_object_path(num_objects, num_frames, rng=None, max_speed=0.01)
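As a rough intuition for what a max_speed bound can mean, the sketch below generates several object paths as random walks in the unit square, where no step moves an object farther than max_speed. It is not the algorithm used by random_multi_object_path; the bounded_walk and random_paths helpers are hypothetical, and treating max_speed as a per-frame displacement in normalized coordinates is an assumption.

```python
import math
import random


def bounded_walk(num_frames, max_speed, rng):
    """Random walk in the unit square with step length <= max_speed."""
    x, y = rng.random(), rng.random()
    path = [(x, y)]
    for _ in range(num_frames - 1):
        angle = rng.uniform(0, 2 * math.pi)
        speed = rng.uniform(0, max_speed)
        # Clamping to [0, 1] can only shorten a step, never lengthen it.
        x = min(1.0, max(0.0, x + speed * math.cos(angle)))
        y = min(1.0, max(0.0, y + speed * math.sin(angle)))
        path.append((x, y))
    return path


def random_paths(num_objects, num_frames, max_speed=0.01, seed=0):
    """Return one bounded walk per object, all driven by one seeded RNG."""
    rng = random.Random(seed)
    return [bounded_walk(num_frames, max_speed, rng)
            for _ in range(num_objects)]


paths = random_paths(num_objects=3, num_frames=5, max_speed=0.2, seed=0)
for path in paths:
    print(path)
```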

- kwcoco.demo.toydata_video.random_path(num, degree=1, dimension=2, rng=None, mode='boid')

Create a random path using the curve method selected by mode.

- Parameters:

num (int) – number of points in the path

degree (int) – degree of curviness of the path, default=1

dimension (int) – number of spatial dimensions, default=2

mode (str) – can be boid, walk, or bezier

rng (RandomState | None | int) – seed, default=None

References

https://github.com/dhermes/bezier
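For intuition about how degree and dimension shape a bezier-mode path, the sketch below samples a Bézier curve with random control points using de Casteljau's algorithm in pure Python. It is independent of the bezier package referenced above and is not kwcoco's implementation; random_bezier_path and de_casteljau are hypothetical helpers, and drawing the degree + 1 control points uniformly from the unit cube is an assumption.

```python
import random


def de_casteljau(control_points, t):
    """Evaluate a Bezier curve at parameter t by repeated interpolation."""
    points = [tuple(p) for p in control_points]
    while len(points) > 1:
        points = [
            tuple((1 - t) * a + t * b for a, b in zip(p, q))
            for p, q in zip(points, points[1:])
        ]
    return points[0]


def random_bezier_path(num, degree=1, dimension=2, seed=None):
    """Sample ``num`` points along a random Bezier curve.

    A curve of the given degree has ``degree + 1`` control points, each
    drawn uniformly from the unit cube of the given dimension.
    """
    rng = random.Random(seed)
    control_points = [
        tuple(rng.random() for _ in range(dimension))
        for _ in range(degree + 1)
    ]
    ts = [i / (num - 1) for i in range(num)] if num > 1 else [0.0]
    return control_points, [de_casteljau(control_points, t) for t in ts]


control_points, path = random_bezier_path(num=10, degree=3, dimension=2, seed=0)
for point in path:
    print(point)
```

Because every sampled point is a convex combination of the control points, the path stays inside the unit cube, and it starts and ends at the first and last control points.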

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> num = 10
>>> dimension = 2
>>> degree = 3
>>> rng = None
>>> path = random_path(num, degree, dimension, rng, mode='boid')
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> plt = kwplot.autoplt()
>>> kwplot.multi_plot(xdata=path[:, 0], ydata=path[:, 1], fnum=1, doclf=1, xlim=(0, 1), ylim=(0, 1))
>>> kwplot.show_if_requested()

Example

>>> # xdoctest: +REQUIRES(--3d)
>>> # xdoctest: +REQUIRES(module:bezier)
>>> import kwarray
>>> import kwplot
>>> import numpy as np
>>> plt = kwplot.autoplt()
>>> #
>>> num = num_frames = 100
>>> rng = kwarray.ensure_rng(0)
>>> #
>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> paths = []
>>> paths.append(random_path(num, degree=3, dimension=3, mode='bezier'))
>>> paths.append(random_path(num, degree=2, dimension=3, mode='bezier'))
>>> paths.append(random_path(num, degree=4, dimension=3, mode='bezier'))
>>> #
>>> from mpl_toolkits.mplot3d import Axes3D  # NOQA
>>> ax = plt.gcf().add_subplot(projection='3d')
>>> ax.cla()
>>> #
>>> for path in paths:
>>>     time = np.arange(len(path))
>>>     ax.plot(time, path.T[0] * 1, path.T[1] * 1, 'o-')
>>> ax.set_xlim(0, num_frames)
>>> ax.set_ylim(-.01, 1.01)
>>> ax.set_zlim(-.01, 1.01)
>>> ax.set_xlabel('x')
>>> ax.set_ylabel('y')
>>> ax.set_zlabel('z')