kwcoco.metrics.voc_metrics module

- class kwcoco.metrics.voc_metrics.VOC_Metrics(classes=None)

Bases: NiceRepr

API to compute object detection scores using the Pascal VOC evaluation method.

To use, add true and predicted detections for each image and then run the VOC_Metrics.score() function.

- Variables:

recs (Dict[int, List[dict]]) – true boxes for each image. maps image ids to a list of records within that image. Each record is a tlbr bbox, a difficult flag, and a class name.

cx_to_lines (Dict[int, List]) – VOC formatted predictions. mapping from class index to all predictions for that category. Each “line” is a list of [[<imgid>, <score>, <tl_x>, <tl_y>, <br_x>, <br_y>]].

classes (None | List[str] | kwcoco.CategoryTree) – class names
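As a rough illustration of the two containers described above, the sketch below builds them by hand. The record keys (`'bbox'`, `'difficult'`, `'name'`) and the helper `lines_for_class` are assumptions made for this example only; the class itself may store records differently.

```python
# Illustrative only: hand-built versions of the containers described above.
# The dict keys 'bbox', 'difficult', and 'name' are assumed for this sketch.

classes = ['cat', 'dog']

# image id -> list of truth records (tlbr box, difficult flag, class name)
recs = {
    1: [
        {'bbox': [10, 10, 50, 60], 'difficult': False, 'name': 'cat'},
        {'bbox': [30, 40, 90, 95], 'difficult': True, 'name': 'dog'},
    ],
    2: [
        {'bbox': [0, 0, 20, 20], 'difficult': False, 'name': 'cat'},
    ],
}

# class index -> list of [imgid, score, tl_x, tl_y, br_x, br_y]
cx_to_lines = {
    0: [[1, 0.9, 12, 11, 49, 58], [2, 0.4, 1, 1, 19, 22]],
    1: [[1, 0.7, 28, 41, 88, 97]],
}


def lines_for_class(classname):
    """Hypothetical helper: look up all prediction lines for a class name."""
    cx = classes.index(classname)
    return cx_to_lines.get(cx, [])
```

Keying predictions by class and truth by image mirrors how VOC evaluation proceeds: one category at a time, matching each prediction against the truth boxes of its own image.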

- score(iou_thresh=0.5, bias=1, method='voc2012')

Compute VOC scores for every category

Example

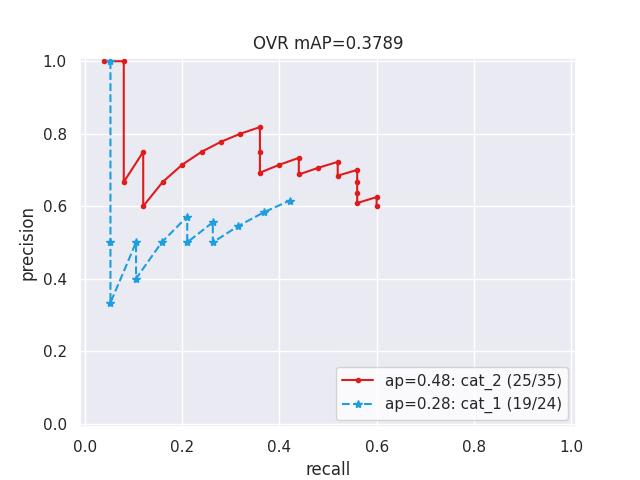

>>> import ubelt as ub
>>> from kwcoco.metrics.detect_metrics import DetectionMetrics
>>> from kwcoco.metrics.voc_metrics import *  # NOQA
>>> dmet = DetectionMetrics.demo(
>>>     nimgs=1, nboxes=(0, 100), n_fp=(0, 30), n_fn=(0, 30), classes=2, score_noise=0.9, newstyle=0)
>>> gid = ub.peek(dmet.gid_to_pred_dets)
>>> self = VOC_Metrics(classes=dmet.classes)
>>> self.add_truth(dmet.true_detections(gid), gid)
>>> self.add_predictions(dmet.pred_detections(gid), gid)
>>> voc_scores = self.score()
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> kwplot.figure(fnum=1, doclf=True)
>>> voc_scores['perclass'].draw(key='pr')
>>> kwplot.figure(fnum=2)
>>> dmet.true_detections(0).draw(color='green', labels=None)
>>> dmet.pred_detections(0).draw(color='blue', labels=None)
>>> kwplot.autoplt().gca().set_xlim(0, 100)
>>> kwplot.autoplt().gca().set_ylim(0, 100)

- kwcoco.metrics.voc_metrics._pr_curves(y, method='voc2012')

Compute a precision-recall (PR) curve using the given method.

- Parameters:

y (pd.DataFrame | DataFrameArray) – output of detection_confusions

- Returns:

Tuple[float, ndarray, ndarray]

Example

>>> import pandas as pd
>>> y1 = pd.DataFrame.from_records([
>>>     {'pred': 0, 'score': 10.00, 'true': -1, 'weight': 1.00},
>>>     {'pred': 0, 'score':  1.65, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  8.64, 'true': -1, 'weight': 1.00},
>>>     {'pred': 0, 'score':  3.97, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  1.68, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  5.06, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  0.25, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  1.75, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  8.52, 'true':  0, 'weight': 1.00},
>>>     {'pred': 0, 'score':  5.20, 'true':  0, 'weight': 1.00},
>>> ])
>>> import kwarray
>>> y2 = kwarray.DataFrameArray(y1)
>>> _pr_curves(y2)
>>> _pr_curves(y1)
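To make the idea of turning confusion rows into a PR curve concrete, here is a minimal pure-Python sketch. It is not the library's implementation: the function name `pr_points` is hypothetical, the `weight` column is ignored, and it assumes that `true == -1` marks an unmatched prediction (a false positive) while `pred == -1` would mark a missed truth.

```python
def pr_points(records):
    """Cumulative precision and recall over predictions sorted by score.

    Assumptions for this sketch: each row is a dict with 'pred', 'true',
    and 'score'; true == -1 means the prediction matched no truth box,
    and pred == -1 means a truth box was never predicted.
    """
    # Every row with a real 'true' label counts as one positive to recover.
    n_true = sum(1 for r in records if r['true'] != -1)
    # Only rows that carry an actual prediction contribute curve points.
    preds = sorted((r for r in records if r['pred'] != -1),
                   key=lambda r: -r['score'])
    tp = fp = 0
    prec, rec = [], []
    for r in preds:
        if r['true'] == r['pred']:
            tp += 1
        else:
            fp += 1
        prec.append(tp / (tp + fp))
        rec.append(tp / n_true if n_true else 0.0)
    return prec, rec


# Four rows taken from the example above (two unmatched, two matched).
rows = [
    {'pred': 0, 'score': 10.00, 'true': -1},
    {'pred': 0, 'score': 8.64, 'true': -1},
    {'pred': 0, 'score': 8.52, 'true': 0},
    {'pred': 0, 'score': 5.20, 'true': 0},
]
prec, rec = pr_points(rows)
```

Because the two highest-scoring rows are unmatched, precision starts at zero and only rises once the matched predictions are reached.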

- kwcoco.metrics.voc_metrics._voc_eval(lines, recs, classname, iou_thresh=0.5, method='voc2012', bias=1.0)

VOC AP evaluation for a single category.

- Parameters:

lines (List[list]) – VOC formatted predictions. Each “line” is a list of [[<imgid>, <score>, <tl_x>, <tl_y>, <br_x>, <br_y>]].

recs (Dict[int, List[dict]]) – true boxes for each image. maps image ids to a list of records within that image. Each record is a tlbr bbox, a difficult flag, and a class name.

classname (str) – the category to evaluate.

method (str) – code for how the AP is computed.

bias (float) – either 1.0 or 0.0.

- Returns:

info about the evaluation containing AP. Contains fp, tp, prec, rec,

- Return type:

Dict

Note

Raw replication of matlab implementation of creating assignments and the resulting PR-curves and AP. Based on MATLAB code [1].

References

[1] http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCdevkit_18-May-2011.tar
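The assignment step and the role of `bias` can be sketched as follows. This is a simplified illustration, not the function's actual code: the names `iou_tlbr` and `greedy_assign` are hypothetical, difficult flags are not handled, and the `>=` comparison against the threshold is an assumption of this sketch. With `bias=1.0` box coordinates are treated as inclusive pixel indices (width is `br_x - tl_x + 1`); with `bias=0.0` they are treated as continuous.

```python
def iou_tlbr(a, b, bias=1.0):
    """IoU of two [tl_x, tl_y, br_x, br_y] boxes with a pixel bias."""
    iw = min(a[2], b[2]) - max(a[0], b[0]) + bias
    ih = min(a[3], b[3]) - max(a[1], b[1]) + bias
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    area_a = (a[2] - a[0] + bias) * (a[3] - a[1] + bias)
    area_b = (b[2] - b[0] + bias) * (b[3] - b[1] + bias)
    return inter / (area_a + area_b - inter)


def greedy_assign(lines, true_boxes_by_img, iou_thresh=0.5, bias=1.0):
    """Flag each prediction as TP (True) or FP (False), best score first.

    Each truth box can be claimed by at most one prediction; a later
    prediction that best overlaps an already claimed box is a false positive.
    """
    claimed = {gid: [False] * len(boxes)
               for gid, boxes in true_boxes_by_img.items()}
    flags = []
    for gid, _score, *box in sorted(lines, key=lambda line: -line[1]):
        best_iou, best_idx = 0.0, None
        for idx, tbox in enumerate(true_boxes_by_img.get(gid, [])):
            ov = iou_tlbr(box, tbox, bias)
            if ov > best_iou:
                best_iou, best_idx = ov, idx
        hit = (best_idx is not None and best_iou >= iou_thresh
               and not claimed[gid][best_idx])
        if hit:
            claimed[gid][best_idx] = True
        flags.append(hit)
    return flags


lines = [[1, 0.9, 0, 0, 9, 9], [1, 0.8, 1, 0, 10, 9], [2, 0.7, 0, 0, 5, 5]]
truth = {1: [[0, 0, 9, 9]]}
flags = greedy_assign(lines, truth)
```

The cumulative sums of these TP and FP flags are what a PR curve, and then AP, would be computed from.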

- kwcoco.metrics.voc_metrics._voc_ave_precision(rec, prec, method='voc2012')

Compute AP from precision and recall. Based on MATLAB code in [1], [2], and [3].

- Parameters:

rec (ndarray) – recall

prec (ndarray) – precision

method (str) – either voc2012 or voc2007
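The two method codes are named after VOC devkit conventions: the 2007 devkit averages interpolated precision at 11 recall thresholds, while later devkits integrate the area under a monotonically non-increasing precision envelope. The sketch below shows both under that assumption; the function names are hypothetical and the code is an independent illustration rather than this function's body.

```python
def ap_11_point(rec, prec):
    """11-point interpolated AP (VOC2007 convention).

    For each threshold t in 0.0, 0.1, ..., 1.0 take the maximum precision
    among points with recall >= t (0 if none), then average the 11 values.
    """
    total = 0.0
    for i in range(11):
        t = i / 10
        candidates = [p for r, p in zip(rec, prec) if r >= t]
        total += max(candidates) if candidates else 0.0
    return total / 11


def ap_envelope(rec, prec):
    """Area under the precision envelope (VOC2010 and later convention)."""
    # Pad with sentinel points at recall 0 and 1.
    mrec = [0.0] + list(rec) + [1.0]
    mpre = [0.0] + list(prec) + [0.0]
    # Sweep right to left so precision never increases with recall.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    return sum((mrec[i] - mrec[i - 1]) * mpre[i]
               for i in range(1, len(mrec)))


rec = [0.5, 0.5, 1.0]
prec = [1.0, 0.5, 2 / 3]
ap07 = ap_11_point(rec, prec)
ap12 = ap_envelope(rec, prec)
```

The two conventions generally give slightly different values for the same curve, so results computed with different method codes should not be compared directly.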

- Returns:

ap: average precision

- Return type:

References