kwcoco.demo.toydata module

Generates “toydata” for demo and testing purposes.

Note

The implementation of demodata_toy_img and demodata_toy_dset should be redone using the tools built for random_video_dset, which have more extensible implementations.

- kwcoco.demo.toydata.demodata_toy_dset(image_size=(600, 600), n_imgs=5, verbose=3, rng=0, newstyle=True, dpath=None, fpath=None, bundle_dpath=None, aux=None, use_cache=True, **kwargs)

Create a toy detection problem

- Parameters:

image_size (Tuple[int, int]) – The width and height of the generated images

n_imgs (int) – number of images to generate

rng (int | RandomState | None) – random number generator or seed. Defaults to 0.

newstyle (bool) – create newstyle kwcoco data. default=True

dpath (str | PathLike | None) – path to the directory that will contain the bundle (defaults to a kwcoco cache dir). Ignored if bundle_dpath is given.

fpath (str | PathLike | None) – path to the kwcoco file. If bundle_dpath is not specified, the parent directory of fpath is used as the bundle. If dpath is specified, fpath should be a descendant of it.

bundle_dpath (str | PathLike | None) – path to the directory that will store images. If specified, dpath is ignored. If unspecified, a bundle will be written inside dpath.

aux (bool | None) – if True generates dummy auxiliary channels

verbose (int) – verbosity mode. default=3

use_cache (bool) – if True caches the generated json in the dpath. Default=True

**kwargs – accepts old, backwards-compatible argument names: gsize is an alias for image_size.
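The dpath, fpath, and bundle_dpath descriptions above combine into a precedence rule. The sketch below is one reading of those descriptions, not kwcoco's code: the function name resolve_bundle_dpath, the stand-in default directory, and the bundle folder name are all hypothetical placeholders.

```python
from pathlib import Path
from typing import Optional, Union

PathArg = Union[str, Path]


def resolve_bundle_dpath(
    dpath: Optional[PathArg] = None,
    fpath: Optional[PathArg] = None,
    bundle_dpath: Optional[PathArg] = None,
    default_dpath: PathArg = "toydata_cache",  # hypothetical stand-in for the kwcoco cache dir
    bundle_name: str = "toy_bundle",           # hypothetical bundle folder name
) -> Path:
    """Hypothetical sketch of the documented dpath / fpath / bundle_dpath precedence."""
    if bundle_dpath is not None:
        # An explicit bundle wins; dpath is ignored entirely.
        return Path(bundle_dpath)
    if fpath is not None:
        # Without an explicit bundle, the kwcoco file's parent acts as the bundle.
        return Path(fpath).parent
    # Otherwise a bundle is written inside dpath (or the default cache dir).
    base = Path(dpath) if dpath is not None else Path(default_dpath)
    return base / bundle_name
```

Under this reading, bundle_dpath always overrides dpath, and fpath only decides the bundle location when no bundle_dpath is given.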

- Return type:

kwcoco.CocoDataset

- SeeAlso:

random_video_dset

CommandLine

xdoctest -m kwcoco.demo.toydata_image demodata_toy_dset --show

Todo

[ ] Non-homogeneous image sizes

Example

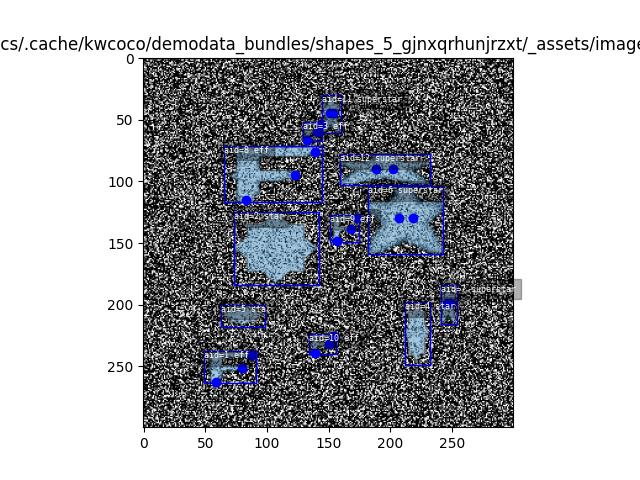

>>> from kwcoco.demo.toydata_image import *
>>> import kwcoco
>>> dset = demodata_toy_dset(image_size=(300, 300), aux=True, use_cache=False)
>>> # xdoctest: +REQUIRES(--show)
>>> print(ub.urepr(dset.dataset, nl=2))
>>> import kwplot
>>> kwplot.autompl()
>>> dset.show_image(gid=1)
>>> ub.startfile(dset.bundle_dpath)

dset._tree()

>>> from kwcoco.demo.toydata_image import *
>>> import kwcoco
dset = demodata_toy_dset(image_size=(300, 300), aux=True, use_cache=False)
print(dset.imgs[1])
dset._tree()

dset = demodata_toy_dset(image_size=(300, 300), aux=True, use_cache=False,
                         bundle_dpath='test_bundle')
print(dset.imgs[1])
dset._tree()

dset = demodata_toy_dset(
    image_size=(300, 300), aux=True, use_cache=False, dpath='test_cache_dpath')

- kwcoco.demo.toydata.random_single_video_dset(image_size=(600, 600), num_frames=5, num_tracks=3, tid_start=1, gid_start=1, video_id=1, anchors=None, rng=None, render=False, dpath=None, autobuild=True, verbose=3, aux=None, multispectral=False, max_speed=0.01, channels=None, multisensor=False, **kwargs)

Create the video scene layout of object positions.

Note

Does not render the data unless specified.
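Because rendering is opt-in, the render argument (bool | dict) effectively has three outcomes. A minimal sketch of that normalization follows, assuming a hypothetical helper normalize_render_arg that is not part of kwcoco: falsy values skip rendering, True renders with default parameters, and a dict is used as the rendering parameters.

```python
from typing import Any, Dict, Optional, Union


def normalize_render_arg(
    render: Union[bool, Dict[str, Any], None],
) -> Optional[Dict[str, Any]]:
    """Hypothetical helper: map a ``render`` argument to rendering params.

    Returns None when nothing should be rendered, otherwise a dict of
    rendering parameters (an empty dict means "use the defaults").
    """
    if not render:
        # False, None, or an empty dict: only the scene layout is created.
        return None
    if isinstance(render, dict):
        # A non-empty dict is treated as the rendering parameters themselves.
        return dict(render)
    # Any other truthy value (e.g. True) renders with default parameters.
    return {}
```

Note that under the "if truthy" wording, an empty dict is falsy and therefore would not trigger rendering.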

- Parameters:

image_size (Tuple[int, int]) – size of the images

num_frames (int) – number of frames in this video

num_tracks (int) – number of tracks in this video

tid_start (int) – track-id start index, default=1

gid_start (int) – image-id start index, default=1

video_id (int) – video-id of this video, default=1

anchors (ndarray | None) – base anchor sizes of the object boxes we will generate.

rng (RandomState | None | int) – random state / seed

render (bool | dict) – if truthy, renders the images; a dict input is used as the rendering parameters.

autobuild (bool) – prebuild coco lookup indexes, default=True

verbose (int) – verbosity level

aux (bool | None | List[str]) – if specified generates auxiliary channels

multispectral (bool) – if True, simulates multispectral imagery. This is similar to aux, but has no “main” file.

max_speed (float) – max speed of movers

channels (str | None | kwcoco.ChannelSpec) – if specified generates multispectral images with dummy channels

multisensor (bool) – if True, generates demodata from “multiple sensors”; in other words, observations may have different “bands”.

**kwargs – accepts old, backwards-compatible argument names: gsize is an alias for image_size.
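To make max_speed concrete, assume object centers live in normalized [0, 1] image coordinates (the anchors in the examples are given as fractions of the image size). A mover could then follow a bounded random walk like the sketch below. This illustrates the parameter's meaning only and is not kwcoco's path generator, which the Todo list for this function says still needs better parameterization. The name random_walk_centers is hypothetical, and bounding the step per axis (rather than by Euclidean length) is an assumption.

```python
import random
from typing import List, Optional, Tuple


def random_walk_centers(
    num_frames: int,
    max_speed: float = 0.01,
    rng: Optional[random.Random] = None,
) -> List[Tuple[float, float]]:
    """Hypothetical sketch of one mover's path in normalized [0, 1] coordinates.

    Assumption: each frame-to-frame step is bounded by ``max_speed`` per axis.
    """
    rng = rng if rng is not None else random.Random()
    # Start the mover at a uniformly random normalized position.
    x, y = rng.random(), rng.random()
    path = [(x, y)]
    for _ in range(num_frames - 1):
        # Draw a bounded step, then clamp so the center stays inside the image.
        x = min(max(x + rng.uniform(-max_speed, max_speed), 0.0), 1.0)
        y = min(max(y + rng.uniform(-max_speed, max_speed), 0.0), 1.0)
        path.append((x, y))
    return path
```

With the default max_speed=0.01, a center moves at most 1% of the image extent per axis between frames, which is why the first example raises it to 0.2 to produce visibly moving tracks.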

Todo

[ ] Need maximum allowed object overlap measure

[ ] Need better parameterized path generation

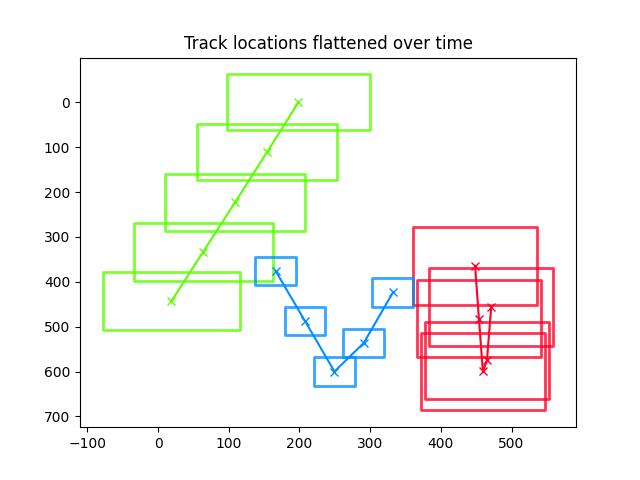

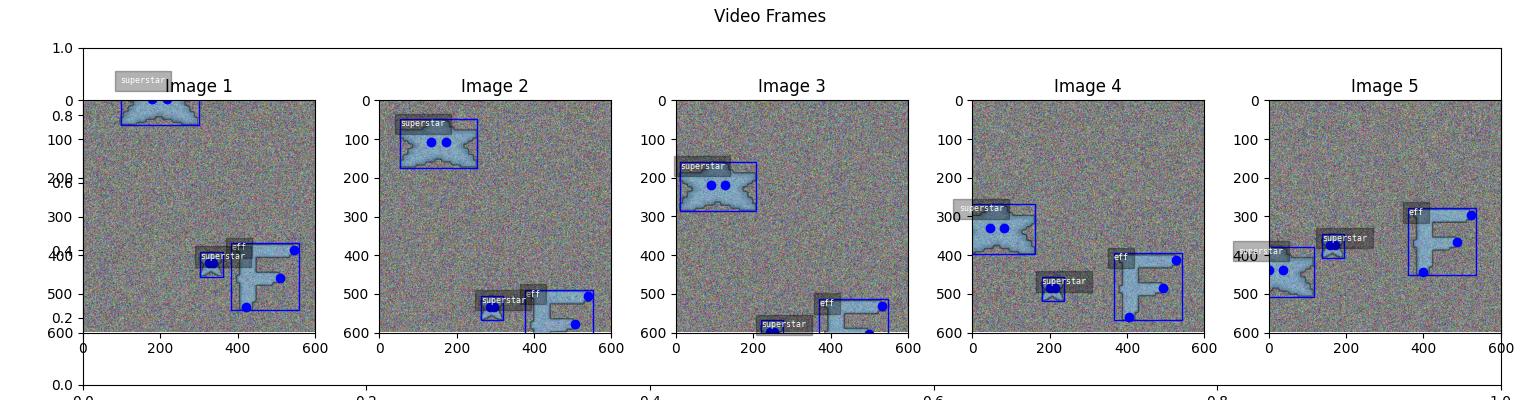

Example

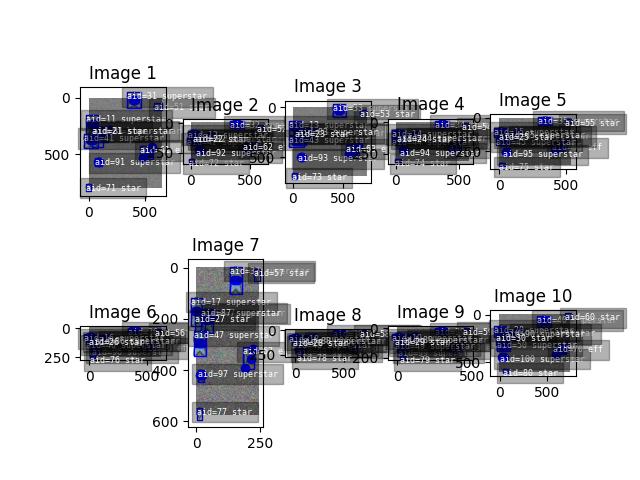

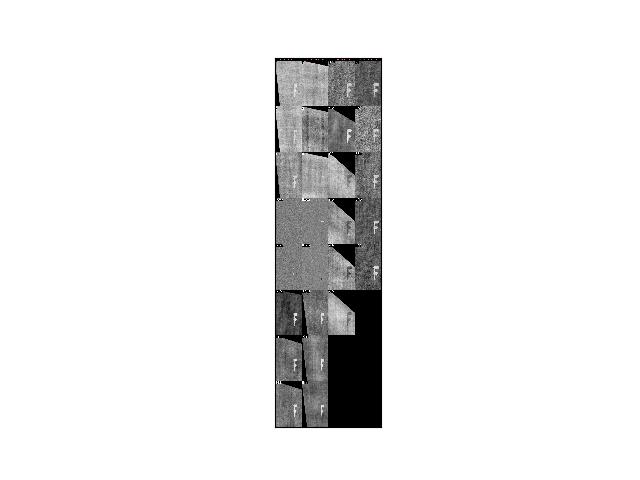

>>> import numpy as np
>>> from kwcoco.demo.toydata_video import random_single_video_dset
>>> anchors = np.array([ [0.3, 0.3],  [0.1, 0.1]])
>>> dset = random_single_video_dset(render=True, num_frames=5,
>>>                                 num_tracks=3, anchors=anchors,
>>>                                 max_speed=0.2, rng=91237446)
>>> # xdoctest: +REQUIRES(--show)
>>> # Show the tracks in a single image
>>> import kwplot
>>> import kwimage
>>> #kwplot.autosns()
>>> kwplot.autoplt()
>>> # Group track boxes and centroid locations
>>> paths = []
>>> track_boxes = []
>>> for tid, aids in dset.index.trackid_to_aids.items():
>>>     boxes = dset.annots(aids).boxes.to_cxywh()
>>>     path = boxes.data[:, 0:2]
>>>     paths.append(path)
>>>     track_boxes.append(boxes)
>>> # Plot the tracks over time
>>> ax = kwplot.figure(fnum=1, doclf=1).gca()
>>> colors = kwimage.Color.distinct(len(track_boxes))
>>> for i, boxes in enumerate(track_boxes):
>>>     color = colors[i]
>>>     path = boxes.data[:, 0:2]
>>>     boxes.draw(color=color, centers={'radius': 0.01}, alpha=0.8)
>>>     ax.plot(path.T[0], path.T[1], 'x-', color=color)
>>> ax.invert_yaxis()
>>> ax.set_title('Track locations flattened over time')
>>> # Plot the image sequence
>>> fig = kwplot.figure(fnum=2, doclf=1)
>>> gids = list(dset.imgs.keys())
>>> pnums = kwplot.PlotNums(nRows=1, nSubplots=len(gids))
>>> for gid in gids:
>>>     dset.show_image(gid, pnum=pnums(), fnum=2, title=f'Image {gid}', show_aid=0, setlim='image')
>>> fig.suptitle('Video Frames')
>>> fig.set_size_inches(15.4, 4.0)
>>> fig.tight_layout()
>>> kwplot.show_if_requested()

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> anchors = np.array([ [0.2, 0.2],  [0.1, 0.1]])
>>> gsize = np.array([(600, 600)])
>>> print(anchors * gsize)
>>> dset = random_single_video_dset(render=True, num_frames=10,
>>>                                 anchors=anchors, num_tracks=10,
>>>                                 image_size='random')
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> plt = kwplot.autoplt()
>>> plt.clf()
>>> gids = list(dset.imgs.keys())
>>> pnums = kwplot.PlotNums(nSubplots=len(gids))
>>> for gid in gids:
>>>     dset.show_image(gid, pnum=pnums(), fnum=1, title=f'Image {gid}')
>>> kwplot.show_if_requested()

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> dset = random_single_video_dset(num_frames=10, num_tracks=10, aux=True)
>>> assert 'auxiliary' in dset.imgs[1]
>>> assert dset.imgs[1]['auxiliary'][0]['channels']
>>> assert dset.imgs[1]['auxiliary'][1]['channels']

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> multispectral = True
>>> dset = random_single_video_dset(num_frames=1, num_tracks=1, multispectral=True)
>>> dset._check_json_serializable()
>>> dset.dataset['images']
>>> assert dset.imgs[1]['auxiliary'][1]['channels']
>>> # test that we can render
>>> render_toy_dataset(dset, rng=0, dpath=None, renderkw={})

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> dset = random_single_video_dset(num_frames=4, num_tracks=1, multispectral=True, multisensor=True, image_size='random', rng=2338)
>>> dset._check_json_serializable()
>>> assert dset.imgs[1]['auxiliary'][1]['channels']
>>> # Print before and after render
>>> #print('multisensor-images = {}'.format(ub.urepr(dset.dataset['images'], nl=-2)))
>>> #print('multisensor-images = {}'.format(ub.urepr(dset.dataset, nl=-2)))
>>> print(ub.hash_data(dset.dataset))
>>> # test that we can render
>>> render_toy_dataset(dset, rng=0, dpath=None, renderkw={})
>>> #print('multisensor-images = {}'.format(ub.urepr(dset.dataset['images'], nl=-2)))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> from kwcoco.demo.toydata_video import _draw_video_sequence  # NOQA
>>> gids = [1, 2, 3, 4]
>>> final = _draw_video_sequence(dset, gids)
>>> print('dset.fpath = {!r}'.format(dset.fpath))
>>> kwplot.imshow(final)

- kwcoco.demo.toydata.random_video_dset(num_videos=1, num_frames=2, num_tracks=2, anchors=None, image_size=(600, 600), verbose=3, render=False, aux=None, multispectral=False, multisensor=False, rng=None, dpath=None, max_speed=0.01, channels=None, background='noise', **kwargs)

Create a toy Coco Video Dataset

- Parameters:

num_videos (int) – number of videos

num_frames (int) – number of images per video

num_tracks (int) – number of tracks per video

image_size (Tuple[int, int]) – The width and height of the generated images

render (bool | dict) – if truthy, the toy annotations are synthetically rendered. See render_toy_image() for details.

rng (int | None | RandomState) – random seed / state

dpath (str | PathLike | None) – only used if render is truthy, place to write rendered images.

verbose (int) – verbosity mode, default=3

aux (bool | None) – if True generates dummy auxiliary / asset channels

multispectral (bool) – similar to aux, but does not have the concept of a “main” image.

max_speed (float) – max speed of movers

channels (str | None) – experimental new way to get MSI with specific band distributions.

**kwargs – accepts old, backwards-compatible argument names: gsize is an alias for image_size.
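random_single_video_dset exposes video_id, gid_start, and tid_start, which suggests that a multi-video dataset can be assembled by generating one video at a time with shifted id ranges so that image and track ids never collide. The sketch below shows that bookkeeping under this assumption; plan_video_id_ranges is a hypothetical name, and random_video_dset may assign ids differently internally.

```python
from typing import Dict, List


def plan_video_id_ranges(
    num_videos: int, num_frames: int, num_tracks: int
) -> List[Dict[str, int]]:
    """Hypothetical sketch: per-video id offsets when stacking single-video datasets.

    Each video gets consecutive image ids and track ids that continue where
    the previous video left off, so ids stay unique across the whole dataset.
    """
    plans = []
    gid_start, tid_start = 1, 1
    for video_id in range(1, num_videos + 1):
        plans.append({
            "video_id": video_id,
            "gid_start": gid_start,
            "tid_start": tid_start,
        })
        # Advance past the image ids and track ids consumed by this video.
        gid_start += num_frames
        tid_start += num_tracks
    return plans
```

With num_videos=3, num_frames=2, and num_tracks=5 (the settings of the first example for this function), this plan yields image ids 1 to 6 and track ids 1 to 15.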

- SeeAlso:

random_single_video_dset

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> dset = random_video_dset(render=True, num_videos=3, num_frames=2,
>>>                          num_tracks=5, image_size=(128, 128))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> dset.show_image(1, doclf=True)
>>> dset.show_image(2, doclf=True)

>>> from kwcoco.demo.toydata_video import *  # NOQA
dset = random_video_dset(render=False, num_videos=3, num_frames=2,
                         num_tracks=10)
dset._tree()
dset.imgs[1]

Example

>>> from kwcoco.demo.toydata_video import *  # NOQA
>>> # Test small images
>>> dset = random_video_dset(render=True, num_videos=1, num_frames=1,
>>>                          num_tracks=1, image_size=(2, 2))
>>> ann = dset.annots().peek()
>>> print('ann = {}'.format(ub.urepr(ann, nl=2)))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> dset.show_image(1, doclf=True)

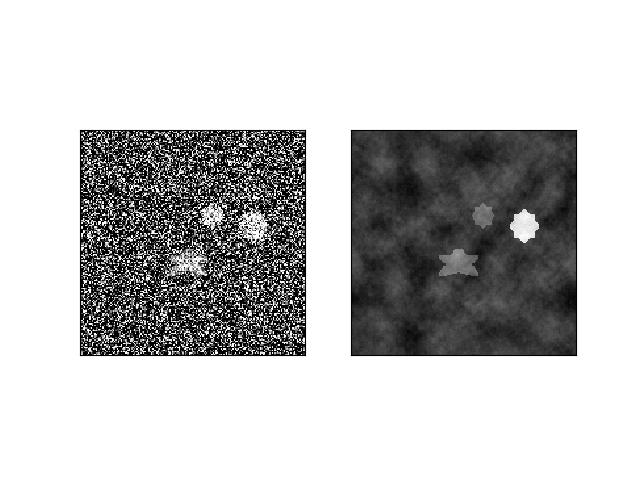

- kwcoco.demo.toydata.demodata_toy_img(anchors=None, image_size=(104, 104), categories=None, n_annots=(0, 50), fg_scale=0.5, bg_scale=0.8, bg_intensity=0.1, fg_intensity=0.9, gray=True, centerobj=None, exact=False, newstyle=True, rng=None, aux=None, **kwargs)

Generate a single image with non-overlapping toy objects of available categories.

Todo

DEPRECATE IN FAVOR OF random_single_video_dset + render_toy_image

- Parameters:

anchors (ndarray | None) – Nx2 base width / height of boxes

image_size (Tuple[int, int]) – width / height of the image

categories (List[str] | None) – list of category names

n_annots (Tuple | int) – controls how many annotations are in the image. If it is a tuple, it is interpreted as uniform random bounds.

fg_scale (float) – standard deviation of foreground intensity

bg_scale (float) – standard deviation of background intensity

bg_intensity (float) – mean of background intensity

fg_intensity (float) – mean of foreground intensity

centerobj (str | None) – if 'pos', the first annotation is placed in the center of the image; if 'neg', no annotations are placed in the center.

exact (bool) – if True, ensures that exactly the number of specified annots are generated.

newstyle (bool) – use the new-style kwcoco format

rng (RandomState | int | None) – the random state used to seed the process

aux (bool | None) – if specified builds auxiliary channels

**kwargs – accepts old, backwards-compatible argument names: gsize is an alias for image_size.
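The two accepted forms of n_annots can be illustrated with a small sampler. This is a sketch, not kwcoco's code: sample_num_annots is a hypothetical name, and treating the tuple's upper bound as inclusive is an assumption, since the description only says "uniform random bounds".

```python
import random
from typing import Optional, Tuple, Union


def sample_num_annots(
    n_annots: Union[int, Tuple[int, int]],
    rng: Optional[random.Random] = None,
) -> int:
    """Hypothetical sketch of how ``n_annots`` might be interpreted."""
    rng = rng if rng is not None else random.Random()
    if isinstance(n_annots, tuple):
        low, high = n_annots
        # Assumption: both bounds are inclusive (random.randint semantics).
        return rng.randint(low, high)
    # A plain int is used as the requested count directly.
    return int(n_annots)
```

The sampled value is only a target. The exact parameter suggests that, unless exact=True, fewer annotations may actually be placed, presumably because the generated objects must not overlap.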

CommandLine

xdoctest -m kwcoco.demo.toydata_image demodata_toy_img:0 --profile
xdoctest -m kwcoco.demo.toydata_image demodata_toy_img:1 --show

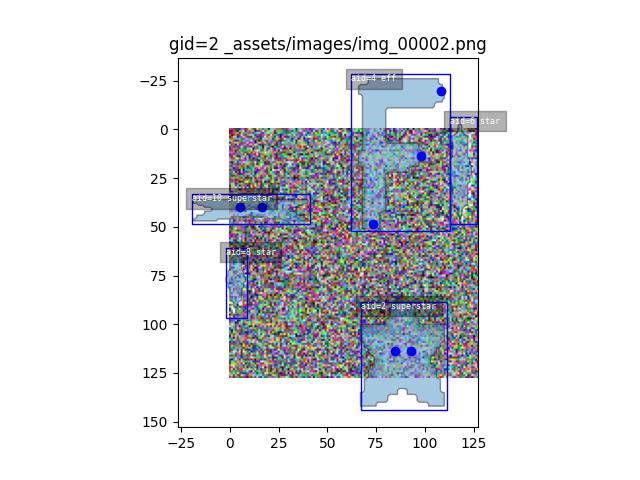

Example

>>> from kwcoco.demo.toydata_image import *  # NOQA
>>> img, anns = demodata_toy_img(image_size=(32, 32), anchors=[[.3, .3]], rng=0)
>>> img['imdata'] = '<ndarray shape={}>'.format(img['imdata'].shape)
>>> print('img = {}'.format(ub.urepr(img)))
>>> print('anns = {}'.format(ub.urepr(anns, nl=2, cbr=True)))
>>> # xdoctest: +IGNORE_WANT
img = {
    'height': 32,
    'imdata': '<ndarray shape=(32, 32, 3)>',
    'width': 32,
}
anns = [{'bbox': [15, 10, 9, 8],
  'category_name': 'star',
  'keypoints': [],
  'segmentation': {'counts': '[`06j0000O20N1000e8', 'size': [32, 32]},},
 {'bbox': [11, 20, 7, 7],
  'category_name': 'star',
  'keypoints': [],
  'segmentation': {'counts': 'g;1m04N0O20N102L[=', 'size': [32, 32]},},
 {'bbox': [4, 4, 8, 6],
  'category_name': 'superstar',
  'keypoints': [{'keypoint_category': 'left_eye', 'xy': [7.25, 6.8125]},
                {'keypoint_category': 'right_eye', 'xy': [8.75, 6.8125]}],
  'segmentation': {'counts': 'U4210j0300O01010O00MVO0ed0', 'size': [32, 32]},},
 {'bbox': [3, 20, 6, 7],
  'category_name': 'star',
  'keypoints': [],
  'segmentation': {'counts': 'g31m04N000002L[f0', 'size': [32, 32]},},]

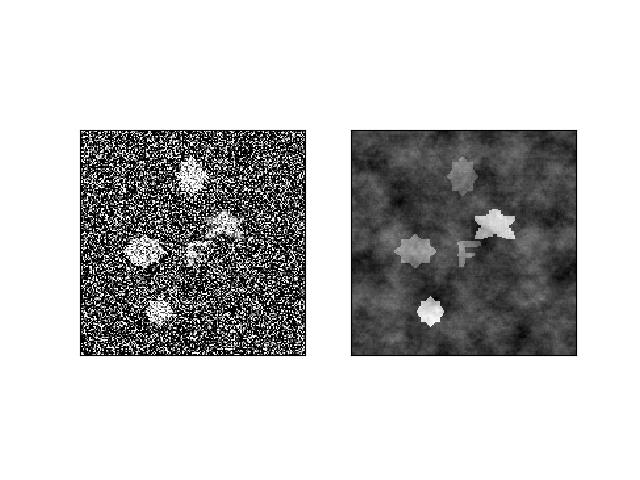

Example

>>> # xdoctest: +REQUIRES(--show)
>>> img, anns = demodata_toy_img(image_size=(172, 172), rng=None, aux=True)
>>> print('anns = {}'.format(ub.urepr(anns, nl=1)))
>>> import kwplot
>>> kwplot.autompl()
>>> kwplot.imshow(img['imdata'], pnum=(1, 2, 1), fnum=1)
>>> auxdata = img['auxiliary'][0]['imdata']
>>> kwplot.imshow(auxdata, pnum=(1, 2, 2), fnum=1)
>>> kwplot.show_if_requested()
