kwcoco.metrics.detect_metrics module

- class kwcoco.metrics.detect_metrics.DetectionMetrics(classes=None)

Bases: NiceRepr

Object that computes associations between detections and can convert them into sklearn-compatible representations for scoring.

- Variables:

gid_to_true_dets (Dict[int, kwimage.Detections]) – maps image ids to truth

gid_to_pred_dets (Dict[int, kwimage.Detections]) – maps image ids to predictions

classes (kwcoco.CategoryTree | None) – the categories to be scored. If unspecified, these are inferred from the truth detections.

Example

>>> # Demo how to use detection metrics directly given detections only
>>> # (no kwcoco file required)
>>> from kwcoco.metrics.detect_metrics import DetectionMetrics
>>> import kwimage
>>> import ubelt as ub
>>> # Setup random true detections (these are just boxes and scores)
>>> true_dets = kwimage.Detections.random(3)
>>> # Peek at the simple internals of a detections object
>>> print('true_dets.data = {}'.format(ub.urepr(true_dets.data, nl=1)))
>>> # Create similar but different predictions
>>> true_subset = true_dets.take([1, 2]).warp(kwimage.Affine.coerce({'scale': 1.1}))
>>> false_positive = kwimage.Detections.random(3)
>>> pred_dets = kwimage.Detections.concatenate([true_subset, false_positive])
>>> dmet = DetectionMetrics()
>>> dmet.add_predictions(pred_dets, imgname='image1')
>>> dmet.add_truth(true_dets, imgname='image1')
>>> # Raw confusion vectors
>>> cfsn_vecs = dmet.confusion_vectors()
>>> print(cfsn_vecs.data.pandas().to_string())
>>> # Our scoring definition (derived from confusion vectors)
>>> print(dmet.score_kwcoco())
>>> # VOC scoring
>>> print(dmet.score_voc(bias=0))
>>> # Original pycocotools scoring
>>> # xdoctest: +REQUIRES(module:pycocotools)
>>> print(dmet.score_pycocotools())

Example

>>> dmet = DetectionMetrics.demo(
>>>     nimgs=100, nboxes=(0, 3), n_fp=(0, 1), classes=8, score_noise=0.9, hacked=False)
>>> print(dmet.score_kwcoco(bias=0, compat='mutex', prioritize='iou')['mAP'])
...
>>> # NOTE: IN GENERAL NETHARN AND VOC ARE NOT THE SAME
>>> print(dmet.score_voc(bias=0)['mAP'])
0.8582...
>>> #print(dmet.score_coco()['mAP'])

- enrich_confusion_vectors(cfsn_vecs)

Adds annotation id information into confusion vectors computed via this detection metrics object.

TODO: should likely use this at the end of the function that builds the confusion vectors.

- classmethod from_coco(true_coco, pred_coco, gids=None, verbose=0)

Create detection metrics from two coco files representing the truth and predictions.

- Parameters:

true_coco (kwcoco.CocoDataset) – coco dataset with ground truth

pred_coco (kwcoco.CocoDataset) – coco dataset with predictions

Example

>>> import kwcoco
>>> from kwcoco.demo.perterb import perterb_coco
>>> true_coco = kwcoco.CocoDataset.demo('shapes')
>>> perterbkw = dict(box_noise=0.5, cls_noise=0.5, score_noise=0.5)
>>> pred_coco = perterb_coco(true_coco, **perterbkw)
>>> self = DetectionMetrics.from_coco(true_coco, pred_coco)
>>> self.score_voc()
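The class variables gid_to_true_dets and gid_to_pred_dets map image ids to detections, so building metrics from two coco datasets amounts to grouping each dataset's annotations by image. The following is a minimal sketch of that grouping on plain COCO-style dictionaries. The helper name group_by_image is hypothetical and this is not the kwcoco implementation; it only assumes the standard COCO keys images, annotations, id, and image_id.

```python
from collections import defaultdict


def group_by_image(coco_dict):
    """Group COCO-style annotations by image id (hypothetical helper)."""
    gid_to_anns = defaultdict(list)
    for ann in coco_dict.get('annotations', []):
        gid_to_anns[ann['image_id']].append(ann)
    # Keep images without annotations so they still take part in scoring.
    for img in coco_dict.get('images', []):
        gid_to_anns.setdefault(img['id'], [])
    return dict(gid_to_anns)


true_coco = {
    'images': [{'id': 1}, {'id': 2}],
    'annotations': [
        {'id': 10, 'image_id': 1, 'bbox': [0, 0, 5, 5], 'category_id': 1},
    ],
}
gid_to_true = group_by_image(true_coco)
print(sorted(gid_to_true))
```

Applying the same grouping to the prediction dataset gives two dictionaries keyed by the same image ids, which is the shape of data that add_truth and add_predictions accept one image at a time.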

- add_predictions(pred_dets, imgname=None, gid=None)

Register/Add predicted detections for an image

- Parameters:

pred_dets (kwimage.Detections) – predicted detections

imgname (str | None) – a unique string to identify the image

gid (int | None) – the integer image id if known

- add_truth(true_dets, imgname=None, gid=None)

Register/Add groundtruth detections for an image

- Parameters:

true_dets (kwimage.Detections) – groundtruth

imgname (str | None) – a unique string to identify the image

gid (int | None) – the integer image id if known

- property classes

- confusion_vectors(iou_thresh=0.5, bias=0, gids=None, compat='mutex', prioritize='iou', ignore_classes='ignore', background_class=NoParam, verbose='auto', workers=0, track_probs='try', max_dets=None)

Assigns predicted boxes to the true boxes so we can transform the detection problem into a classification problem for scoring.

- Parameters:

iou_thresh (float | List[float]) – bounding box overlap iou threshold required for assignment. If a list is given, the return type is a dict keyed by threshold. Defaults to 0.5.

bias (float) – for computing bounding box overlap, either 1 or 0. Defaults to 0.

gids (List[int] | None) – which subset of images ids to compute confusion metrics on. If not specified all images are used. Defaults to None.

compat (str) – one of (‘ancestors’ | ‘mutex’ | ‘all’). Determines which pred boxes are allowed to match which true boxes. If ‘mutex’, then pred boxes can only match true boxes of the same class. If ‘ancestors’, then pred boxes can match true boxes that match or have a coarser label. If ‘all’, then any pred can match any true, regardless of its category label. Defaults to ‘mutex’.

prioritize (str) – one of (‘iou’ | ‘class’ | ‘correct’). Determines which box to assign to if multiple true boxes overlap a predicted box. If prioritize is ‘iou’, then the true box with maximum iou (above iou_thresh) is chosen. If prioritize is ‘class’, then matching a compatible class is preferred over a higher iou. If prioritize is ‘correct’, then ancestors of the true class are preferred over descendants of the true class, which are preferred over unrelated classes. Defaults to ‘iou’.

ignore_classes (set | str) – class names indicating ignore regions. Defaults to ‘ignore’.

background_class (str | NoParamType) – Name of the background class. If unspecified we try to determine it with heuristics. A value of None means there is no background class.

verbose (int | str) – verbosity flag. Default to ‘auto’. In auto mode, verbose=1 if len(gids) > 1000.

workers (int) – number of parallel assignment processes. Defaults to 0

track_probs (str) – can be ‘try’, ‘force’, or False. If truthy, we assume probabilities for multiple classes are available. Defaults to ‘try’.

- Returns:

ConfusionVectors | Dict[float, ConfusionVectors]

Example

>>> dmet = DetectionMetrics.demo(nimgs=30, classes=3,
>>>                              nboxes=10, n_fp=3, box_noise=10,
>>>                              with_probs=False)
>>> iou_to_cfsn = dmet.confusion_vectors(iou_thresh=[0.3, 0.5, 0.9])
>>> for t, cfsn in iou_to_cfsn.items():
>>>     print('t = {!r}'.format(t))
...     print(cfsn.binarize_ovr().measures())
...     print(cfsn.binarize_classless().measures())
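To make the roles of iou_thresh and compat more concrete, here is a minimal, self-contained sketch of a greedy assignment between predicted and true boxes. It is an illustration under simplifying assumptions, not the kwcoco implementation: the helper names iou and assign and the tuple layouts are hypothetical, boxes are (x1, y1, x2, y2) with bias=0, only the ‘mutex’ and ‘all’ compat modes are handled, and ties are always resolved by highest iou.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes (bias=0)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def assign(true_items, pred_items, iou_thresh=0.5, compat='mutex'):
    """
    Greedy assignment (hypothetical helper).

    true_items: list of (box, class_name)
    pred_items: list of (box, class_name, score)
    Returns rows of (pred_class, true_class, score, iou); None means "no match".
    """
    rows = []
    unused = set(range(len(true_items)))
    # Visit predictions from most to least confident.
    for pbox, pcls, score in sorted(pred_items, key=lambda p: -p[2]):
        best_idx, best_iou = None, -1.0
        for tidx in sorted(unused):
            tbox, tcls = true_items[tidx]
            if compat == 'mutex' and tcls != pcls:
                continue  # only same-class truth may match
            overlap = iou(pbox, tbox)
            if overlap >= iou_thresh and overlap > best_iou:
                best_idx, best_iou = tidx, overlap
        if best_idx is None:
            rows.append((pcls, None, score, None))  # unmatched prediction
        else:
            unused.discard(best_idx)
            rows.append((pcls, true_items[best_idx][1], score, best_iou))
    # Truth boxes that were never matched are missed detections.
    for tidx in sorted(unused):
        rows.append((None, true_items[tidx][1], None, None))
    return rows


true_items = [((0, 0, 10, 10), 'cat'), ((20, 20, 30, 30), 'dog')]
pred_items = [((0, 0, 10, 9), 'dog', 0.9),
              ((20, 20, 30, 30), 'dog', 0.8),
              ((50, 50, 60, 60), 'cat', 0.7)]
for mode in ['mutex', 'all']:
    print(mode, assign(true_items, pred_items, compat=mode))
```

With compat='mutex' the first prediction cannot match the overlapping 'cat' truth box and is left unmatched, while with compat='all' it is matched and recorded as a class confusion. Each returned row pairs a predicted class with a true class, which is what turns detection into a classification problem for scoring.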

- score_kwcoco(iou_thresh=0.5, bias=0, gids=None, compat='all', prioritize='iou')

Score using the kwcoco method (derived from confusion vectors).

- score_voc(iou_thresh=0.5, bias=1, method='voc2012', gids=None, ignore_classes='ignore')

Score using the VOC method.

Example

>>> dmet = DetectionMetrics.demo(
>>>     nimgs=100, nboxes=(0, 3), n_fp=(0, 1), classes=8,
>>>     score_noise=.5)
>>> print(dmet.score_voc()['mAP'])
0.9399...
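The bias argument is documented as being either 0 or 1 and is used when computing bounding box overlap; score_voc defaults to 1 while the other scorers default to 0. The sketch below shows one common reading of such a bias, in which it is added to each side length so that coordinates are treated as inclusive pixel indices. The helper name box_iou is hypothetical, and the exact way kwcoco applies the bias is an assumption here.

```python
def box_iou(a, b, bias=0):
    """IoU of (x1, y1, x2, y2) boxes; bias is added to every side length."""
    def area(box):
        width = max(0, box[2] - box[0] + bias)
        height = max(0, box[3] - box[1] + bias)
        return width * height

    # The intersection is itself a box (possibly empty).
    inter_box = (max(a[0], b[0]), max(a[1], b[1]),
                 min(a[2], b[2]), min(a[3], b[3]))
    inter = area(inter_box)
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


a = (0, 0, 9, 9)
b = (5, 0, 14, 9)
print(box_iou(a, b, bias=0))  # sides measured as x2 - x1
print(box_iou(a, b, bias=1))  # sides measured as x2 - x1 + 1
```

The same pair of boxes yields a different overlap under each convention, so a box pair near iou_thresh can be a match under one bias and a miss under the other. This is one reason scores from different scorers should only be compared with matching bias settings.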

- _to_coco()

Convert to a coco representation of truth and predictions, with inverse aid mappings.

- score_pycocotools(with_evaler=False, with_confusion=False, verbose=0, iou_thresholds=None)

Score using the MS-COCO method, as computed by pycocotools.

- Returns:

dictionary with pycocotools (pct) info

- Return type:

Dict

Example

>>> # xdoctest: +REQUIRES(module:pycocotools)
>>> from kwcoco.metrics.detect_metrics import *
>>> dmet = DetectionMetrics.demo(
>>>     nimgs=10, nboxes=(0, 3), n_fn=(0, 1), n_fp=(0, 1), classes=8, with_probs=False)
>>> pct_info = dmet.score_pycocotools(verbose=1,
>>>                                   with_evaler=True,
>>>                                   with_confusion=True,
>>>                                   iou_thresholds=[0.5, 0.9])
>>> evaler = pct_info['evaler']
>>> iou_to_cfsn_vecs = pct_info['iou_to_cfsn_vecs']
>>> for iou_thresh in iou_to_cfsn_vecs.keys():
>>>     print('iou_thresh = {!r}'.format(iou_thresh))
>>>     cfsn_vecs = iou_to_cfsn_vecs[iou_thresh]
>>>     ovr_measures = cfsn_vecs.binarize_ovr().measures()
>>>     print('ovr_measures = {}'.format(ub.urepr(ovr_measures, nl=1, precision=4)))

Note

By default pycocotools computes average precision as the literal average of computed precisions at 101 uniformly spaced recall thresholds.

pycocotools seems to only allow predictions with the same category as the truth to match those truth objects. This should be the same as calling dmet.confusion_vectors with compat=‘mutex’.

pycocotools does not take into account the fact that each box often has a score for each category.

pycocotools will be incorrect if any annotation has an id of 0.

A major difference in the way kwcoco scores versus pycocotools is the calculation of AP. The assignment between truth and predicted detections produces similar enough results. Given our confusion vectors we use the scikit-learn definition of AP, whereas pycocotools seems to compute precision and recall (more or less correctly) but then resamples the precision at various specified recall thresholds (in the accumulate function, specifically how pr is resampled into the q array). This can lead to a large difference in reported scores.

pycocotools also smooths out the precision such that it is monotonically decreasing, which might not be the best idea.

pycocotools area ranges are inclusive on both ends, which means the “small” and “medium” truth selections do overlap somewhat.
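The difference between the two AP definitions described in this note can be seen on a tiny example. The sketch below is a simplified pure-Python illustration, not the code of either library: ap_step follows the scikit-learn style sum of (R_n - R_{n-1}) * P_n, while ap_101 mimics the resampling approach by making precision monotonically non-increasing and averaging it at 101 uniformly spaced recall thresholds. All function names are hypothetical, and details such as per-category handling, area ranges, and detection limits are ignored.

```python
def pr_curve(labels, scores):
    """Precision and recall after each prediction, in descending score order."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    n_pos = sum(labels)
    tp = 0
    precision, recall = [], []
    for rank, i in enumerate(order, start=1):
        tp += labels[i]
        precision.append(tp / rank)
        recall.append(tp / n_pos)
    return precision, recall


def ap_step(precision, recall):
    """Step-wise AP: sum of (R_n - R_{n-1}) * P_n."""
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


def ap_101(precision, recall):
    """Mean of smoothed precision sampled at 101 recall thresholds."""
    # Smooth so precision never increases as recall increases.
    smooth = list(precision)
    for i in range(len(smooth) - 2, -1, -1):
        smooth[i] = max(smooth[i], smooth[i + 1])
    total = 0.0
    for k in range(101):
        thresh = k / 100
        # First curve point whose recall reaches the threshold, if any.
        idx = next((i for i, r in enumerate(recall) if r >= thresh), None)
        total += smooth[idx] if idx is not None else 0.0
    return total / 101


labels = [1, 0, 1, 1, 0]            # 1 = true positive, 0 = false positive
scores = [0.9, 0.8, 0.7, 0.6, 0.5]  # prediction confidences
precision, recall = pr_curve(labels, scores)
print('step AP      =', ap_step(precision, recall))
print('101-point AP =', ap_101(precision, recall))
```

On this curve the smoothed 101-point value is higher than the step-wise value, because smoothing replaces each dip in precision with the best precision achieved at any higher recall.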

- score_coco(with_evaler=False, with_confusion=False, verbose=0, iou_thresholds=None)

Score using the MS-COCO method. The signature and documentation of this method are identical to those of score_pycocotools; see that entry for the return value, example, and notes.

- classmethod demo(**kwargs)

Creates random true boxes and predicted boxes that have some noisy offset from the truth.

- Kwargs:

classes (int): class list or the number of foreground classes. Defaults to 1.

nimgs (int): number of images in the coco dataset. Defaults to 1.

nboxes (int): boxes per image. Defaults to 1.

n_fp (int): number of false positives. Defaults to 0.

n_fn (int): number of false negatives. Defaults to 0.

box_noise (float): std of a normal distribution used to perturb both box location and box size. Defaults to 0.

cls_noise (float): probability that a class label will change. Must be between 0 and 1. Defaults to 0.

anchors (ndarray): used to create random boxes. Defaults to None.

null_pred (bool): if True, predicted classes are returned as null, which means only localization scoring is suitable. Defaults to 0.

with_probs (bool): if True, includes per-class probabilities with predictions. Defaults to 1.

rng (int | None | RandomState): random seed / state

CommandLine

xdoctest -m kwcoco.metrics.detect_metrics DetectionMetrics.demo:2 --show

Example

>>> kwargs = {}
>>> # Seed the RNG
>>> kwargs['rng'] = 0
>>> # Size parameters determine how big the data is
>>> kwargs['nimgs'] = 5
>>> kwargs['nboxes'] = 7
>>> kwargs['classes'] = 11
>>> # Noise parameters perturb predictions further from the truth
>>> kwargs['n_fp'] = 3
>>> kwargs['box_noise'] = 0.1
>>> kwargs['cls_noise'] = 0.5
>>> dmet = DetectionMetrics.demo(**kwargs)
>>> print('dmet.classes = {}'.format(dmet.classes))
dmet.classes = <CategoryTree(nNodes=12, maxDepth=3, maxBreadth=4...)>
>>> # Can grab kwimage.Detection object for any image
>>> print(dmet.true_detections(gid=0))
<Detections(4)>
>>> print(dmet.pred_detections(gid=0))
<Detections(7)>

Example

>>> # Test case with null predicted categories
>>> dmet = DetectionMetrics.demo(nimgs=30, null_pred=1, classes=3,
>>>                              nboxes=10, n_fp=3, box_noise=0.1,
>>>                              with_probs=False)
>>> dmet.gid_to_pred_dets[0].data
>>> dmet.gid_to_true_dets[0].data
>>> cfsn_vecs = dmet.confusion_vectors()
>>> binvecs_ovr = cfsn_vecs.binarize_ovr()
>>> binvecs_per = cfsn_vecs.binarize_classless()
>>> measures_per = binvecs_per.measures()
>>> measures_ovr = binvecs_ovr.measures()
>>> print('measures_per = {!r}'.format(measures_per))
>>> print('measures_ovr = {!r}'.format(measures_ovr))
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> measures_ovr['perclass'].draw(key='pr', fnum=2)

Example

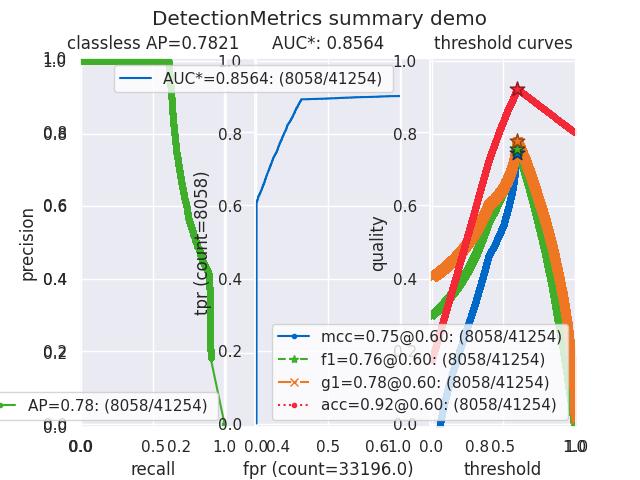

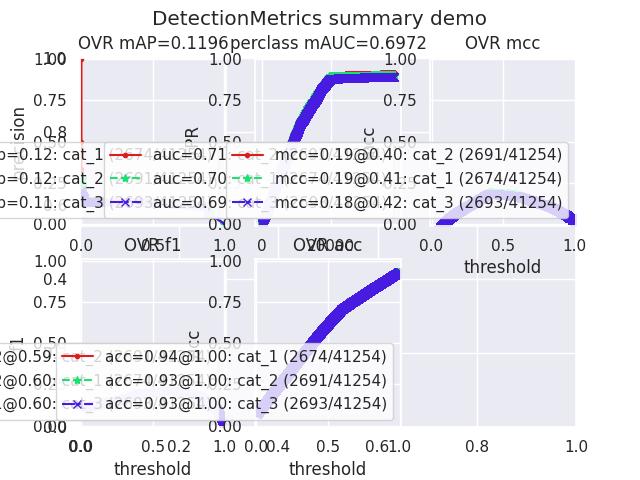

>>> from kwcoco.metrics.confusion_vectors import *  # NOQA
>>> from kwcoco.metrics.detect_metrics import DetectionMetrics
>>> dmet = DetectionMetrics.demo(
>>>     n_fp=(0, 1), n_fn=(0, 1), nimgs=32, nboxes=(0, 16),
>>>     classes=3, rng=0, newstyle=1, box_noise=0.5, cls_noise=0.0, score_noise=0.3, with_probs=False)
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> summary = dmet.summarize(plot=True, title='DetectionMetrics summary demo', with_ovr=True, with_bin=False)
>>> summary['bin_measures']
>>> kwplot.show_if_requested()

- summarize(out_dpath=None, plot=False, title='', with_bin='auto', with_ovr='auto')

Create summary one-versus-rest and binary metrics.

- Parameters:

out_dpath (pathlib.Path | None) – FIXME: not hooked up

with_ovr (str | bool) – include one-versus-rest metrics (wrt the classes). If ‘auto’ enables if possible. FIXME: auto is not working.

with_bin (str | bool) – include binary classless metrics (i.e. detected or not). If ‘auto’ enables if possible. FIXME: auto is not working.

plot (bool) – if true, also write plots. Defaults to False.

title (str) – title for the plots; only used if plot is True.

Example

>>> from kwcoco.metrics.confusion_vectors import *  # NOQA
>>> from kwcoco.metrics.detect_metrics import DetectionMetrics
>>> dmet = DetectionMetrics.demo(
>>>     n_fp=(0, 128), n_fn=(0, 4), nimgs=512, nboxes=(0, 32),
>>>     classes=3, rng=0)
>>> # xdoctest: +REQUIRES(--show)
>>> import kwplot
>>> kwplot.autompl()
>>> dmet.summarize(plot=True, title='DetectionMetrics summary demo')
>>> kwplot.show_if_requested()
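The two kinds of summary metrics correspond to two ways of reducing assigned detections to binary problems. The sketch below illustrates the idea on plain tuples; it is a simplification under stated assumptions and not the behavior of binarize_ovr or binarize_classless themselves. The function names reuse those terms only for readability, each row is a hypothetical (pred_class, true_class, score) triple with None marking "no match", and a score of 0.0 is assumed for rows that do not predict the class in question.

```python
def binarize_classless(rows):
    """One binary problem: was a real object detected, regardless of class?"""
    return [(true is not None, score if pred is not None else 0.0)
            for pred, true, score in rows]


def binarize_ovr(rows, classes):
    """One binary problem per class: this class versus everything else."""
    out = {}
    for cls in classes:
        out[cls] = [(true == cls, score if pred == cls else 0.0)
                    for pred, true, score in rows]
    return out


rows = [
    ('dog', 'dog', 0.9),   # correct detection
    ('cat', 'dog', 0.8),   # localized, but wrong class
    ('cat', None, 0.4),    # false positive
    (None, 'cat', None),   # missed truth
]
print(binarize_classless(rows))
print(binarize_ovr(rows, ['cat', 'dog']))
```

Each output is a list of (is_true, score) pairs, the form expected by standard binary classification metrics such as precision, recall, and AP. In the classless view the wrong-class detection still counts as a hit, whereas in the one-versus-rest view for 'dog' it counts as a miss.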

- kwcoco.metrics.detect_metrics._demo_construct_probs(pred_cxs, pred_scores, classes, rng, hacked=1)

Constructs random probabilities for demo data

- kwcoco.metrics.detect_metrics.pycocotools_confusion_vectors(dmet, evaler, iou_thresh=0.5, verbose=0)

Example

>>> # xdoctest: +REQUIRES(module:pycocotools)
>>> from kwcoco.metrics.detect_metrics import *
>>> dmet = DetectionMetrics.demo(
>>>     nimgs=10, nboxes=(0, 3), n_fn=(0, 1), n_fp=(0, 1), classes=8, with_probs=False)
>>> coco_scores = dmet.score_pycocotools(with_evaler=True)
>>> evaler = coco_scores['evaler']
>>> cfsn_vecs = pycocotools_confusion_vectors(dmet, evaler, verbose=1)

- kwcoco.metrics.detect_metrics.eval_detections_cli(**kw)

DEPRECATED: use kwcoco eval instead.

CommandLine

xdoctest -m ~/code/kwcoco/kwcoco/metrics/detect_metrics.py eval_detections_cli